TARGON V6

03.31.25

Targon Virtual Machine (TVM)

Confidential GPU Attestation and Monetization for Secure AI Workloads

Abstract

The Targon Virtual Machine (TVM) is a confidential computing architecture designed for secure AI workloads, allowing pre-training, post-training, and inference to run securely on bare-metal servers. TVM provides verifiable hardware and GPU attestation, protecting the privacy and integrity of deployed AI models. Its attestation layers build on NVIDIA's nvTrust framework to produce cryptographic proofs of hardware security, which establish trust among stakeholders and enable monetization within confidential subnets.

Introduction

Artificial intelligence (AI) has rapidly become critical infrastructure, which demands strong privacy and security guarantees, especially for sensitive models and data. The Targon Virtual Machine (TVM) addresses this need by deploying Confidential Virtual Machines (CVMs) on bare-metal hardware, providing end-to-end security, integrity, and GPU attestation. Using attestation technologies such as NVIDIA’s nvTrust, TVM keeps trained AI models confidential and secure, enabling controlled monetization of those models in secure subnets.

TVM Architecture

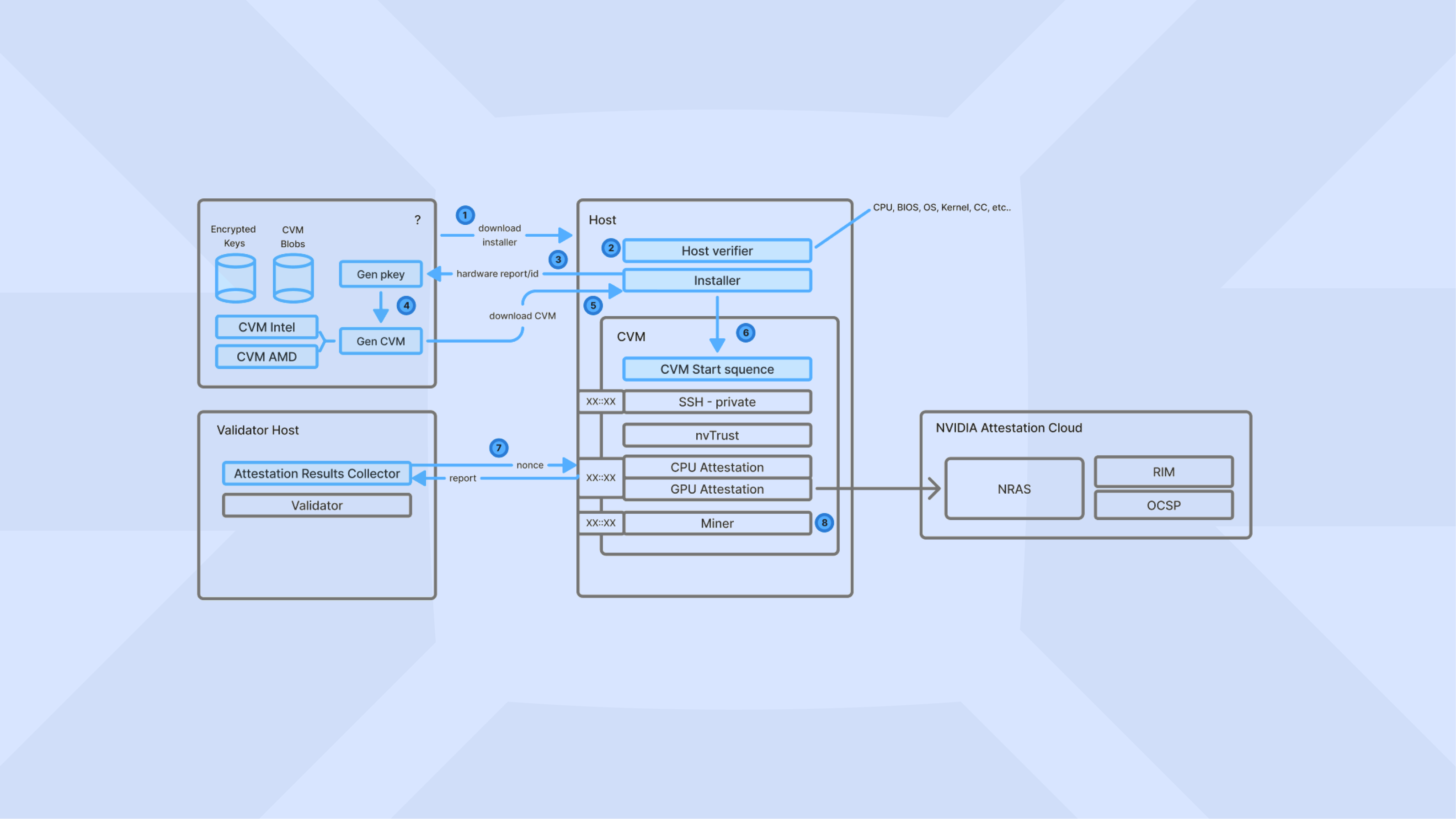

The TVM architecture integrates multiple layers of verification, attestation, and deployment:

- Host Verification

- Host Verification Attestation Service

- Confidential Virtual Machine (CVM) GPU Attestation

- Manifold SDK

1. Host Verification

Host Verification evaluates whether a physical server is ready for confidential computing. It checks for Intel TDX or AMD SEV support, validates the TPM 2.0 module, Secure Boot status, and BIOS configuration, and confirms kernel-level protections against known CPU vulnerabilities. It then generates a JSON report, including hardware identification, that serves as the basis for host attestation.
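The report's schema is not published here. The Python sketch below assumes a hypothetical set of fields (`tdx_supported`, `tpm_version`, `mitigations`, and so on) to show how such a report could be assembled and serialized to JSON; it does not reproduce the tool's actual output.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class HostReport:
    """Hypothetical Host Verification report; field names are illustrative only."""
    hostname: str
    machine_id: str                       # hardware/OS identifier used to track the host
    tdx_supported: bool = False           # Intel TDX capability detected
    sev_supported: bool = False           # AMD SEV capability detected
    tpm_version: str | None = None        # e.g. "2.0", or None if no TPM was found
    secure_boot: bool = False
    mitigations: dict[str, str] = field(default_factory=dict)  # vulnerability -> kernel status

    def to_json(self) -> str:
        # sort_keys gives a stable field order, which makes reports easy to diff
        return json.dumps(asdict(self), sort_keys=True, indent=2)


if __name__ == "__main__":
    report = HostReport(
        hostname="gpu-node-01",
        machine_id="0123456789abcdef",
        tdx_supported=True,
        tpm_version="2.0",
        secure_boot=True,
        mitigations={"spectre_v2": "Mitigation: Enhanced IBRS"},
    )
    print(report.to_json())
```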

2. Host Verification Attestation Service

This service securely receives attestation reports from Host Verification, validates them against defined security criteria, and decides whether the host is trustworthy. When attestation succeeds, the service automatically starts generating Confidential Virtual Machines (CVMs), tracks their progress, and provides secure VM downloads. In this role it acts as a trusted intermediary in the deployment workflow.

3. Confidential Virtual Machine (CVM) GPU Attestation

Inside each CVM, GPU attestation is performed to confirm GPU integrity and firmware authenticity. Leveraging NVIDIA's nvTrust SDK, the CVM GPU Attestation service:

- Generates JWT-based attestation tokens.

- Ensures cryptographic verification of NVIDIA GPU integrity.

- Communicates securely with external validators through REST APIs.

- Uses named pipes for inter-process communication (IPC) between services.

4. Manifold SDK

The Manifold SDK standardizes data models, constants, and APIs across TVM's components, simplifying integration and ensuring consistent communication between verification and attestation services.

Confidential GPU Attestation Workflow

The end-to-end confidential GPU attestation workflow is as follows:

Step 1: Host Preparation

- The Host Verification tool runs before deployment to confirm hardware compatibility.

- Verifies CPU features (Intel TDX/AMD SEV), TPM modules, BIOS settings, Secure Boot, and OS security.

- Produces detailed hardware identification reports.
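As a rough illustration of two of these checks, the Python sketch below reads the standard Linux locations for the Secure Boot EFI variable and the kernel's TPM version attribute. It is a minimal sketch, not the Host Verification tool's actual code; it assumes a Linux host with efivarfs mounted and a kernel that exposes `tpm_version_major`, and it omits the CPU, BIOS, and OS checks.

```python
from __future__ import annotations

from pathlib import Path

# EFI global-variable GUID; efivarfs prefixes each variable's data with 4 attribute bytes
SECURE_BOOT_VAR = Path(
    "/sys/firmware/efi/efivars/SecureBoot-8be4df61-93ca-11d2-aa0d-00e098032b8c"
)
TPM_VERSION_FILE = Path("/sys/class/tpm/tpm0/tpm_version_major")


def secure_boot_enabled(var_path: Path = SECURE_BOOT_VAR) -> bool:
    """True if the SecureBoot EFI variable exists and its single data byte is 1."""
    try:
        data = var_path.read_bytes()
    except OSError:
        return False  # not booted via UEFI, or efivarfs not mounted
    return len(data) >= 5 and data[4] == 1


def tpm_major_version(version_path: Path = TPM_VERSION_FILE) -> int | None:
    """Major TPM version reported by the kernel (2 for TPM 2.0), or None if unavailable."""
    try:
        return int(version_path.read_text().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    print("Secure Boot enabled:", secure_boot_enabled())
    print("TPM major version:", tpm_major_version())
```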

Step 2: Host Attestation

- Reports are securely submitted to the Host Verification Attestation Service.

- This service evaluates hardware security and initiates CVM deployment for verified hosts.

- Provides real-time status monitoring for CVM generation.
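The document does not describe how CVM generation status is tracked. One possible approach, sketched below under that assumption, is a small state machine per host that rejects illegal transitions; the state names (`pending`, `building`, `ready`, `failed`) and the `JobTracker` class are hypothetical.

```python
from __future__ import annotations

import threading
from enum import Enum


class BuildState(str, Enum):
    """Hypothetical lifecycle of a CVM generation job."""
    PENDING = "pending"
    BUILDING = "building"
    READY = "ready"      # image available for secure download
    FAILED = "failed"


# Allowed transitions; terminal states have no successors
_TRANSITIONS = {
    BuildState.PENDING: {BuildState.BUILDING, BuildState.FAILED},
    BuildState.BUILDING: {BuildState.READY, BuildState.FAILED},
    BuildState.READY: set(),
    BuildState.FAILED: set(),
}


class JobTracker:
    """Thread-safe, in-memory record of CVM build jobs keyed by host ID."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._jobs: dict[str, BuildState] = {}

    def start(self, host_id: str) -> None:
        with self._lock:
            self._jobs[host_id] = BuildState.PENDING

    def advance(self, host_id: str, new_state: BuildState) -> None:
        with self._lock:
            current = self._jobs[host_id]  # KeyError if the job was never started
            if new_state not in _TRANSITIONS[current]:
                raise ValueError(f"illegal transition {current.value} -> {new_state.value}")
            self._jobs[host_id] = new_state

    def status(self, host_id: str) -> BuildState | None:
        # Polled by a status endpoint to report progress
        with self._lock:
            return self._jobs.get(host_id)
```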

Step 3: CVM Deployment

- Approved hosts receive provisioned CVMs.

- CVMs include GPU attestation tools, pre-configured to run securely on NVIDIA GPUs.

Step 4: GPU Attestation

- The CVM GPU Attestation service within the deployed CVM performs GPU attestation using NVIDIA’s nvTrust.

- It provides cryptographic tokens verifying GPU integrity, accessible via a secure REST API.

- External validators can query this API for trust verification.
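A validator's side of this exchange might look like the sketch below. It assumes a hypothetical `/attest` endpoint that takes a `nonce` query parameter and returns the JSON output format described later in this document; a fresh random nonce per request lets the validator reject stale or replayed responses. The check inspects fields only; a production validator would also verify the JWT signature.

```python
import json
import secrets
import urllib.parse
import urllib.request


def new_nonce() -> str:
    """32 random bytes, hex-encoded, so each attestation request is unique."""
    return secrets.token_hex(32)


def fetch_attestation(base_url: str, nonce: str, timeout: float = 30.0) -> dict:
    """GET an attestation result from a CVM.

    The /attest path and nonce query parameter are hypothetical placeholders.
    """
    query = urllib.parse.urlencode({"nonce": nonce})
    url = f"{base_url.rstrip('/')}/attest?{query}"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def is_trusted(result: dict, nonce: str) -> bool:
    """Accept only a successful, validated result that echoes our nonce
    and reports at least one GPU."""
    return (
        result.get("success") is True
        and result.get("validated") is True
        and result.get("nonce") == nonce
        and bool(result.get("gpus"))
    )
```

For example, with `n = new_nonce()`, a validator would call `is_trusted(fetch_attestation("https://cvm.example:8443", n), n)`; the host name is a placeholder.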

Step 5: Secure AI Model Execution and Monetization

- Upon successful GPU attestation, the CVM environment is deemed secure.

- Users can safely run pre-training, post-training, and inference workloads.

- Generated models remain confidential, allowing secure monetization via subnet marketplaces.

GPU Attestation Implementation Details

NVIDIA nvTrust Integration

The CVM GPU Attestation service uses NVIDIA's nvTrust framework through a Python wrapper (attestation_wrapper.py). Key features include:

- Adaptive integration to accommodate different nvTrust SDK versions.

- Simplified command-line interface for flexibility in deployment.

- Compatibility handling for various nvTrust API signatures.
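The document does not say how the wrapper adapts to different nvTrust API signatures. One common Python technique, shown here as an assumption rather than the wrapper's actual method, is to inspect the target function's signature and pass only the keyword arguments it accepts. The `attest_old` and `attest_new` functions are hypothetical stand-ins, not nvTrust APIs.

```python
import inspect
from typing import Any, Callable

_KEYWORD_KINDS = (inspect.Parameter.POSITIONAL_OR_KEYWORD, inspect.Parameter.KEYWORD_ONLY)


def call_compatible(func: Callable[..., Any], **kwargs: Any) -> Any:
    """Call func with only the keyword arguments its signature accepts.

    Lets one wrapper drive several SDK versions whose entry points gained
    or dropped optional parameters between releases.
    """
    params = inspect.signature(func).parameters
    if any(p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values()):
        return func(**kwargs)  # function takes **kwargs: pass everything through
    accepted = {
        k: v for k, v in kwargs.items()
        if k in params and params[k].kind in _KEYWORD_KINDS
    }
    return func(**accepted)


def attest_old(nonce):
    """Hypothetical older entry point with no options."""
    return ("old", nonce)


def attest_new(nonce, verbose=False):
    """Hypothetical newer entry point with an added optional flag."""
    return ("new", nonce, verbose)


if __name__ == "__main__":
    for fn in (attest_old, attest_new):
        print(call_compatible(fn, nonce="abc123", verbose=True))
```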

Communication with Go-based Attestation Service

A Go-based HTTP service wraps the Python-based nvTrust interaction and exposes a RESTful API. The two communicate over a named pipe, which keeps attestation data separate from log output; this simplifies error handling and makes results more reliable to parse.
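The named-pipe pattern can be sketched in Python with `os.mkfifo` (POSIX only). In TVM the producer would be the Python wrapper and the consumer the Go service, running as separate processes; here both run as threads in one script so the example is self-contained, and the attestation result is a hard-coded placeholder. Log lines go to stderr while only the JSON result travels through the FIFO.

```python
import json
import os
import sys
import tempfile
import threading


def run_attestation(pipe_path: str) -> None:
    """Stand-in for the attestation process: logs go to stderr,
    the machine-readable result goes only to the named pipe."""
    print("starting attestation", file=sys.stderr)
    result = {"success": True, "nonce": "abc", "validated": True}  # placeholder result
    with open(pipe_path, "w") as pipe:  # blocks until a reader opens the FIFO
        json.dump(result, pipe)


def read_result_via_fifo() -> dict:
    """Create a FIFO, run the producer concurrently, and parse what it sends."""
    with tempfile.TemporaryDirectory() as tmp:
        fifo = os.path.join(tmp, "attestation.fifo")
        os.mkfifo(fifo)  # POSIX only
        producer = threading.Thread(target=run_attestation, args=(fifo,))
        producer.start()
        with open(fifo) as pipe:  # blocks until the producer opens for writing
            data = pipe.read()    # returns at EOF, once the writer closes its end
        producer.join()
    return json.loads(data)


if __name__ == "__main__":
    print(read_result_via_fifo())
```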

Output Format

GPU attestation results are provided in structured JSON:

```
{
  "success": true,
  "nonce": "nonce_value",
  "token": "jwt_attestation_token",
  "claims": {
    /* JWT claims */
  },
  "validated": true,
  "gpus": [
    {
      "id": "GPU-0",
      "model": "NVIDIA H100",
      "claims": {
        /* GPU-specific claims */
      }
    }
  ]
}
```

This format enables straightforward integration with downstream services or blockchain-based validation mechanisms.
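The document does not specify how results are passed to blockchain-based validators. A common pattern, assumed here, is to commit to a hash of the result rather than to the full JSON. The sketch computes SHA-256 over a canonical encoding (sorted keys, fixed separators) so that parties holding the same result derive the same digest regardless of key order or whitespace.

```python
import hashlib
import json


def attestation_digest(result: dict) -> str:
    """SHA-256 hex digest over a canonical JSON encoding of an attestation result.

    Sorting keys and fixing separators makes the encoded bytes, and therefore
    the hash, independent of key order and whitespace in the source JSON.
    """
    canonical = json.dumps(result, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    sample = {"success": True, "nonce": "nonce_value", "validated": True,
              "gpus": [{"id": "GPU-0", "model": "NVIDIA H100"}]}
    print(attestation_digest(sample))
```

This simple canonicalization is adequate for the strings, booleans, and lists in this format; payloads containing floating-point numbers would need a stricter scheme such as the JSON Canonicalization Scheme (RFC 8785).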

Host Verification and Attestation Capabilities

The Host Verification tool ensures comprehensive hardware attestation through:

- Detailed CPU capability checks for confidential computing technologies.

- TPM module verification for hardware-based cryptographic validation.

- Secure Boot configuration verification.

- Multi-layered Intel TDX BIOS verification via direct MSR access, kernel logs, and system files.

- Detection of hardware vulnerabilities and verification of the corresponding kernel-level mitigations.
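On Linux, the kernel reports its view of each known CPU vulnerability under `/sys/devices/system/cpu/vulnerabilities/`, one file per issue, with values such as `Not affected`, `Mitigation: ...`, or `Vulnerable`. The sketch below shows how a verification tool might collect those statuses; it illustrates the kernel interface and is not the Host Verification tool's implementation.

```python
from __future__ import annotations

from pathlib import Path

VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def scan_vulnerabilities(vuln_dir: Path = VULN_DIR) -> dict[str, str]:
    """Map each vulnerability the kernel knows about to its reported status line."""
    if not vuln_dir.is_dir():
        return {}
    return {f.name: f.read_text().strip() for f in sorted(vuln_dir.iterdir()) if f.is_file()}


def unmitigated(statuses: dict[str, str]) -> list[str]:
    """Names whose status starts with 'Vulnerable'
    (as opposed to 'Mitigation: ...' or 'Not affected')."""
    return [name for name, status in statuses.items() if status.startswith("Vulnerable")]


if __name__ == "__main__":
    statuses = scan_vulnerabilities()
    for name, status in statuses.items():
        print(f"{name}: {status}")
    print("unmitigated:", unmitigated(statuses))
```

Statuses beginning with `Unknown` are not treated as vulnerable here; a stricter policy might reject them as well.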

Attestation Service Decision-making

The attestation service evaluates critical sections of submitted host reports, deciding on CVM provisioning based on pre-defined security standards:

- CPU capabilities (TDX/SEV)

- TPM 2.0 integrity

- Secure Boot configuration

- Security vulnerability mitigations

Upon successful verification, VM provisioning is automatically initiated.
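The decision logic is described only at the level of these four criteria. The sketch below is a hypothetical rendering of it in Python: the report keys (`tdx_supported`, `sev_supported`, `tpm_version`, `secure_boot`, `mitigations`) are assumptions, and the function fails closed, so a missing field counts as a failed check. Returning every failure reason, rather than stopping at the first, makes rejections easier to diagnose.

```python
from __future__ import annotations


def evaluate_host(report: dict) -> tuple[bool, list[str]]:
    """Return (approved, reasons) for a host report with hypothetical keys.

    Each check mirrors one of the four criteria; a missing key fails its check.
    """
    reasons: list[str] = []
    if not (report.get("tdx_supported") or report.get("sev_supported")):
        reasons.append("no Intel TDX or AMD SEV support")
    if report.get("tpm_version") != "2.0":
        reasons.append("TPM 2.0 not present")
    if not report.get("secure_boot"):
        reasons.append("Secure Boot disabled")
    vulnerable = [name for name, status in report.get("mitigations", {}).items()
                  if status.startswith("Vulnerable")]
    if vulnerable:
        reasons.append("unmitigated: " + ", ".join(sorted(vulnerable)))
    # Approve only when no check failed; provisioning would start here
    return (not reasons, reasons)
```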

Monetization Opportunities

The TVM framework enables secure monetization of AI models by ensuring that proprietary model code and weights remain confidential and are executed solely within attested, cryptographically secure environments. By leveraging cryptographic attestation combined with GPU-level verification via NVIDIA’s nvTrust, model developers can confidently deploy valuable proprietary AI models without risking intellectual property leakage.

Specifically, TVM facilitates monetization by enabling:

- Secure Model Deployment: Model developers can securely train, fine-tune, and serve their AI models privately, ensuring the model weights and associated intellectual property are never exposed.

- Trustworthy Inference Consumption: Users and enterprises benefit from verifiable guarantees of hardware integrity and execution environment confidentiality, essential for deploying AI workloads in sensitive, regulated, or mission-critical applications.

- Attestation-based Monetization: Trained AI models can be directly monetized within subnet marketplaces through attestation-based access control, creating secure, verifiable economic relationships between model providers and consumers without ever compromising model confidentiality.

This secure environment unlocks new business opportunities, fostering decentralized AI marketplaces built on trust and verifiable security.
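How attestation-based access control is enforced is not specified. As one hypothetical policy, a marketplace could release model access only to CVMs whose most recent attestation was validated within a freshness window. The `AttestationRecord` type and the one-hour default `max_age_s` below are illustrative assumptions.

```python
from __future__ import annotations

import time
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationRecord:
    """Hypothetical record a marketplace keeps for each serving CVM."""
    cvm_id: str
    validated: bool
    attested_at: float  # Unix timestamp of the last successful attestation


def may_serve(record: AttestationRecord | None, max_age_s: float = 3600.0,
              now: float | None = None) -> bool:
    """Grant model access only to a CVM with a validated, recent attestation."""
    if record is None or not record.validated:
        return False
    now = time.time() if now is None else now
    # Reject stale attestations and timestamps from the future
    return 0 <= now - record.attested_at <= max_age_s
```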

Deployment and Management

TVM supports streamlined deployment, leveraging containerization and cloud orchestration technologies:

- Docker containers for CVM attestation services.

- Kubernetes manifests for cluster deployment and scalability.

- Comprehensive logging and monitoring for transparency and manageability.

Security and Compliance

TVM’s design emphasizes robust security:

- Cryptographic proof of hardware integrity.

- JWT-based tokenization for verification.

- Role-based access control and API authentication.

- Secure logging with sensitive data masking.

- Configurable security features suitable for regulated environments.
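Sensitive data masking can be illustrated with Python's standard `logging` module: a filter attached to a handler rewrites each record before it is emitted. The sketch below redacts strings that look like compact JWTs, such as attestation tokens; the regular expression is a heuristic assumption, not TVM's actual masking rule.

```python
import logging
import re

# Three base64url segments joined by dots; a JSON header such as {"alg": ...}
# typically encodes to a string starting with "eyJ"
_JWT_RE = re.compile(r"eyJ[A-Za-z0-9_-]*\.[A-Za-z0-9_-]*\.[A-Za-z0-9_-]*")


class MaskTokensFilter(logging.Filter):
    """Replace anything that looks like a JWT with a placeholder."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg % args first
        record.msg = _JWT_RE.sub("[REDACTED_JWT]", message)
        record.args = ()               # args are already merged into msg
        return True                    # rewrite the record, never drop it


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    for handler in logging.getLogger().handlers:
        handler.addFilter(MaskTokensFilter())
    logging.info("received token %s", "eyJhbGciOiJFUzM4NCJ9.eyJzdWIiOiJncHUtMCJ9.c2ln")
```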

Conclusion

The Targon Virtual Machine (TVM) establishes a secure, confidential computing framework specifically tailored for AI workloads, providing robust hardware and GPU attestation through integration with NVIDIA's nvTrust SDK. By ensuring confidentiality and integrity, TVM enables trustworthy monetization of AI models, fostering secure, decentralized AI marketplaces.

Future Roadmap

- Integration of persistent storage solutions for large-scale VM management.

- Enhanced GPU attestation supporting evolving GPU technologies.

- Expansion to additional confidential computing technologies and compliance frameworks.

- Advanced authentication and real-time metrics collection for improved operational transparency.

TVM: Confidential Computing for Trusted AI Monetization

Empowering Secure AI Innovation through Trusted Hardware and GPU Attestation.

MANIFOLD LABS

© 2025 Manifold Labs, Inc. All Rights Reserved